http://www.mssqltips.com/sqlservertip/2487/ssis-interview-questions-on-transactions-event-handling-and-validation/

Problem

When preparing for your next SSIS interview be sure to understand what could be questions asked in interview. In this tip series, I will try to cover as much as I can to help you prepare better for SSIS interview. This tip covers transactions, event handling and validations SQL Server Integration Services interview questions.

Solution

What is the transaction support feature in SSIS?

- When you execute a package, every task of the package executes in its own transaction. What if you want to execute two or more tasks in a single transaction? This is where the transaction support feature helps. You can group all your logically related tasks in single group. Next you can set the transaction property appropriately to enable a transaction so that all the tasks of the package run in a single transaction. This way you can ensure either all of the tasks complete successfully or if any of them fails, the transaction gets roll-backed too.

What properties do you need to configure in order to use the transaction feature in SSIS?

- Suppose you want to execute 5 tasks in a single transaction, in this case you can place all 5 tasks in a Sequence Container and set the TransactionOption and IsolationLevel properties appropriately.

- The TransactionOption property expects one of these three values:

- Supported - The container/task does not create a separate transaction, but if the parent object has already initiated a transaction then participate in it

- Required - The container/task creates a new transaction irrespective of any transaction initiated by the parent object

- NotSupported - The container/task neither creates a transaction nor participates in any transaction initiated by the parent object

- The TransactionOption property expects one of these three values:

- Isolation level dictates how two more transaction maintains consistency and concurrency when they are running in parallel. To learn more about Transaction and Isolation Level, refer to this tip.

When I enabled transactions in an SSIS package, it failed with this exception: "The Transaction Manager is not available. The DTC transaction failed to start." What caused this exception and how can it be fixed?

- SSIS uses the MS DTC (Microsoft Distributed Transaction Coordinator) Windows Service for transaction support. As such, you need to ensure this service is running on the machine where you are actually executing the SSIS packages or the package execution will fail with the exception message as indicated in this question.

What is event handling in SSIS?

- Like many other programming languages, SSIS and its components raise different events during the execution of the code. You can write an even handler to capture the event and handle it in a few different ways. For example consider you have a data flow task and before execution of this data flow task you want to make some environmental changes such as creating a table to write data into, deleting/truncating a table you want to write, etc. Along the same lines, after execution of the data flow task you want to cleanup some staging tables. In this circumstance you can write an event handler for the OnPreExcute event of the data flow task which gets executed before the actual execution of the data flow. Similar to that you can also write an event handler for OnPostExecute event of the data flow task which gets executed after the execution of the actual data flow task. Please note, not all the tasks raise the same events as others. There might be some specific events related to a specific task that you can use with one object and not with others.

How do you write an event handler?

- First, open your SSIS package in Business Intelligence Development Studio (BIDS) and click on the Event Handlers tab. Next, select the executable/task from the left side combo-box and then select the event you want to write the handler in the right side combo box. Finally, click on the hyperlink to create the event handler. So far you have only created the event handler, you have not specified any sort of action. For that simply drag the required task from the toolbox on the event handler designer surface and configure it appropriately. To learn more about event handling, click here.

What is the DisableEventHandlers property used for?

- Consider you have a task or package with several event handlers, but for some reason you do not want event handlers to be called. One simple solution is to delete all of the event handlers, but that would not be viable if you want to use them in the future. This is where you can use the DisableEventHandlers property. You can set this property to TRUE and all event handlers will be disabled. Please note with this property you simply disable the event handlers and you are not actually removing them. This means you can set this value to FALSE and the event handlers will once again be executed.

What is SSIS validation?

- SSIS validates the package and all of it's tasks to ensure it has been configured correctly. With a given set of configurations and values, all the tasks and package will execute successfully. In other words, during the validation process, SSIS checks if the source and destination locations are accessible and the meta data about the source and destination tables are stored with the package are correct, so that the task will not fail if executed. The validation process reports warnings and errors depending on the validation failure detected. For example, if the source/destination tables/columns get changed/dropped it will show as error. Whereas if you are accessing more columns than used to write to the destination object this will be flagged as a warning. To learn about validationclick here.

Define design time validation versus run time validation.

- Design time validation is performed when you are opening your package in BIDS whereas run time validation is performed when you are actually executing the package.

Define early validation (package level validation) versus late validation (component level validation).

- When a package is executed, the package goes through the validation process. All of the components/tasks of package are validated before actually starting the package execution. This is called early validation or package level validation. During execution of a package, SSIS validates the component/task again before executing that particular component/task. This is called late validation or component level validation.

What is DelayValidation and what is the significance?

- As I said before, during early validation all of the components of the package are validated along with the package itself. If any of the component/task fails to validate, SSIS will not start the package execution. In most cases this is fine, but what if the second task is dependent on the first task? For example, say you are creating a table in the first task and referring to the same table in the second task? When early validation starts, it will not be able to validate the second task as the dependent table has not been created yet. Keep in mind that early validation is performed before the package execution starts. So what should we do in this case? How can we ensure the package is executed successfully and the logically flow of the package is correct? This is where you can use the DelayValidation property. In the above scenario you should set the DelayValidation property of the second task to TRUE in which case early validation i.e. package level validation is skipped for that task and that task would only be validated during late validation i.e. component level validation. Please note using the DelayValidation property you can only skip early validation for that specific task, there is no way to skip late or component level validation.

Problem

When preparing for SSIS interview it is important to understand what questions could be asked in the interview. In this tip series (tip 1, tip 2, tip 3), I will try to cover common SSIS interview questions to help you prepare for a future SSIS interview. In this tip we will cover interview questions related to the SSIS architecture and internals. Check it out.

Solution

What are the different components in the SSIS architecture?

- The SSIS architecture comprises of four main components:

- The SSIS runtime engine manages the workflow of the package

- The data flow pipeline engine manages the flow of data from source to destination and in-memory transformations

- The SSIS object model is used for programmatically creating, managing and monitoring SSIS packages

- The SSIS windows service allows managing and monitoring packages

- To learn more about the architecture click here.

How is SSIS runtime engine different from the SSIS dataflow pipeline engine?

- The SSIS Runtime Engine manages the workflow of the packages during runtime, which means its role is to execute the tasks in a defined sequence. As you know, you can define the sequence using precedence constraints. This engine is also responsible for providing support for event logging, breakpoints in the BIDS designer, package configuration, transactions and connections. The SSIS Runtime engine has been designed to support concurrent/parallel execution of tasks in the package.

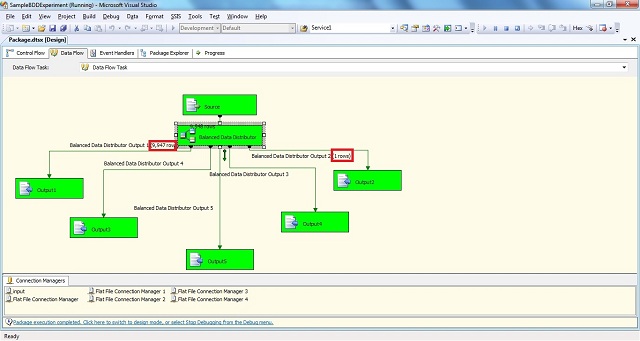

- The Dataflow Pipeline Engine is responsible for executing the data flow tasks of the package. It creates a dataflow pipeline by allocating in-memory structure for storing data in-transit. This means, the engine pulls data from source, stores it in memory, executes the required transformation in the data stored in memory and finally loads the data to the destination. Like the SSIS runtime engine, the Dataflow pipeline has been designed to do its work in parallel by creating multiple threads and enabling them to run multiple execution trees/units in parallel.

How is a synchronous (non-blocking) transformation different from an asynchronous (blocking) transformation in SQL Server Integration Services?

- A transformation changes the data in the required format before loading it to the destination or passing the data down the path. The transformation can be categorized in Synchronous and Asynchronous transformation.

- A transformation is called synchronous when it processes each incoming row (modify the data in required format in place only so that the layout of the result-set remains same) and passes them down the hierarchy/path. It means, output rows are synchronous with the input rows (1:1 relationship between input and output rows) and hence it uses the same allocated buffer set/memory and does not require additional memory. Please note, these kinds of transformations have lower memory requirements as they work on a row-by-row basis (and hence run quite faster) and do not block the data flow in the pipeline. Some of the examples are : Lookup, Derived Columns, Data Conversion, Copy column, Multicast, Row count transformations, etc.

- A transformation is called Asynchronous when it requires all incoming rows to be stored locally in the memory before it can start producing output rows. For example, with an Aggregate Transformation, it requires all the rows to be loaded and stored in memory before it can aggregate and produce the output rows. This way you can see input rows are not in sync with output rows and more memory is required to store the whole set of data (no memory reuse) for both the data input and output. These kind of transformations have higher memory requirements (and there are high chances of buffer spooling to disk if insufficient memory is available) and generally runs slower. The asynchronous transformations are also called "blocking transformations" because of its nature of blocking the output rows unless all input rows are read into memory. To learn more about it click here.

What is the difference between a partially blocking transformation versus a fully blocking transformation in SQL Server Integration Services?

- Asynchronous transformations, as discussed in last question, can be further divided in two categories depending on their blocking behavior:

- Partially Blocking Transformations do not block the output until a full read of the inputs occur. However, they require new buffers/memory to be allocated to store the newly created result-set because the output from these kind of transformations differs from the input set. For example, Merge Join transformation joins two sorted inputs and produces a merged output. In this case if you notice, the data flow pipeline engine creates two input sets of memory, but the merged output from the transformation requires another set of output buffers as structure of the output rows which are different from the input rows. It means the memory requirement for this type of transformations is higher than synchronous transformations where the transformation is completed in place.

- Full Blocking Transformations, apart from requiring an additional set of output buffers, also blocks the output completely unless the whole input set is read. For example, the Sort Transformation requires all input rows to be available before it can start sorting and pass down the rows to the output path. These kind of transformations are most expensive and should be used only as needed. For example, if you can get sorted data from the source system, use that logic instead of using a Sort transformation to sort the data in transit/memory. To learn more about it click here.

What is an SSIS execution tree and how can I analyze the execution trees of a data flow task?

- The work to be done in the data flow task is divided into multiple chunks, which are called execution units, by the dataflow pipeline engine. Each represents a group of transformations. The individual execution unit is called an execution tree, which can be executed by separate thread along with other execution trees in a parallel manner. The memory structure is also called a data buffer, which gets created by the data flow pipeline engine and has the scope of each individual execution tree. An execution tree normally starts at either the source or an asynchronous transformation and ends at the first asynchronous transformation or a destination. During execution of the execution tree, the source reads the data, then stores the data to a buffer, executes the transformation in the buffer and passes the buffer to the next execution tree in the path by passing the pointers to the buffers. To learn more about it click here.

- To see how many execution trees are getting created and how many rows are getting stored in each buffer for a individual data flow task, you can enable logging of these events of data flow task: PipelineExecutionTrees, PipelineComponentTime, PipelineInitialization, BufferSizeTunning, etc. To learn more about events that can be logged click here.

How can an SSIS package be scheduled to execute at a defined time or at a defined interval per day?

- You can configure a SQL Server Agent Job with a job step type of SQL Server Integration Services Package, the job invokes the dtexec command line utility internally to execute the package. You can run the job (and in turn the SSIS package) on demand or you can create a schedule for a one time need or on a reoccurring basis. Refer tothis tip to learn more about it.

What is an SSIS Proxy account and why would you create it?

- When we try to execute an SSIS package from a SQL Server Agent Job it fails with the message "Non-SysAdmins have been denied permission to run DTS Execution job steps without a proxy account". This error message is generated if the account under which SQL Server Agent Service is running and the job owner is not a sysadmin on the instance or the job step is not set to run under a proxy account associated with the SSIS subsystem. Refer tothis tip to learn more about it.

How can you configure your SSIS package to run in 32-bit mode on 64-bit machine when using some data providers which are not available on the 64-bit platform?

- In order to run an SSIS package in 32-bit mode the SSIS project property Run64BitRuntime needs to be set to "False". The default configuration for this property is "True". This configuration is an instruction to load the 32-bit runtime environment rather than 64-bit, and your packages will still run without any additional changes. The property can be found under SSIS Project Property Pages -> Configuration Properties -> Debugging.